Racecar

Racecar is a lightweight Python library for sampling distributions in high dimensions using cutting-edge algorithms. It can also be used to rapidly prototype novel methods and apply them to high-dimensional use cases. Pass a function that evaluates the log posterior and/or its gradient, and away you go! It is designed with stochastic gradients in mind, which makes it well suited to big-data applications, neural networks, regression, mixture modelling, and all sorts of Bayesian inference and sampling problems.
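As a rough illustration of the kind of function meant here, the sketch below builds a minibatch (stochastic-gradient) estimate of a log posterior and its gradient for a toy problem: the mean of unit-variance Gaussian data under a Gaussian prior. The name `make_log_posterior`, its parameters, and the `(value, gradient)` return convention are assumptions made for this example only; check the package's own examples for the signature Racecar actually expects.

```python
import numpy as np

def make_log_posterior(data, prior_var=10.0, batch_size=32, seed=0):
    """Hypothetical helper: returns f(theta) -> (log-posterior estimate, gradient estimate).

    Toy model: data ~ N(theta, 1), prior theta ~ N(0, prior_var).
    Additive constants of the log posterior are dropped.
    """
    rng = np.random.default_rng(seed)
    n = len(data)

    def log_posterior(theta):
        # Draw a minibatch and rescale the likelihood term so that the
        # estimate is unbiased for the full-data log likelihood.
        batch = rng.choice(data, size=batch_size, replace=False)
        scale = n / batch_size
        resid = batch - theta
        logp = -0.5 * theta**2 / prior_var - 0.5 * scale * np.sum(resid**2)
        grad = -theta / prior_var + scale * np.sum(resid)
        return logp, grad

    return log_posterior
```

With `batch_size` equal to the data size the estimate is exact; smaller batches give a noisy gradient, which is the setting the library targets.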

Install it with pip:

pip install racecar

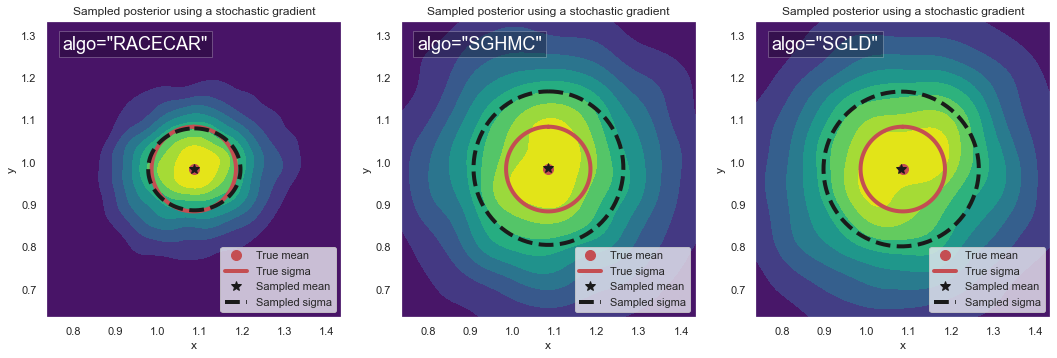

The algorithm learns the gradient noise covariance on the fly and uses the learned representation to damp the dynamics and improve the sampling properties. At no extra computational cost, it offers a significant improvement over state-of-the-art methods such as SGHMC or SGLD.
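To give a feel for what learning a gradient noise covariance on the fly can look like, here is a generic exponentially weighted running estimate built from a stream of noisy gradients. It is not the estimator RACECAR uses; the class name `RunningNoiseCovariance` and the `decay` parameter are assumptions for illustration, and the sketch ignores the fact that the true gradient changes as the sampler moves.

```python
import numpy as np

class RunningNoiseCovariance:
    """Illustrative exponentially weighted mean/covariance of a gradient stream."""

    def __init__(self, dim, decay=0.99):
        self.decay = decay              # closer to 1 means a longer memory
        self.mean = np.zeros(dim)
        self.cov = np.zeros((dim, dim))
        self.count = 0

    def update(self, grad):
        grad = np.asarray(grad, dtype=float)
        if self.count == 0:
            # The first sample initialises the mean; the covariance stays zero.
            self.mean = grad.copy()
        else:
            alpha = 1.0 - self.decay
            delta = grad - self.mean
            self.mean = self.mean + alpha * delta
            # Standard exponentially weighted covariance recursion.
            self.cov = self.decay * (self.cov + alpha * np.outer(delta, delta))
        self.count += 1
        return self.cov
```

Each update costs one outer product and no extra gradient evaluations, which is the sense in which such an estimate can be kept alongside the sampler cheaply.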

The name RACECAR comes from splitting the Langevin dynamics into pieces A, C, E and R, and solving them in a palindromic order.
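The pieces A, C, E and R are not defined here, so the sketch below shows the same idea on a simpler, well-known palindromic splitting of Langevin dynamics: BAOAB, where B is a momentum kick, A a position drift, and O an exact Ornstein-Uhlenbeck update. It is an analogy for the ordering, not the RACECAR scheme itself; the function name and arguments (`grad_U`, step size `h`, friction `gamma`, temperature `kT`, unit mass) are assumptions for this example.

```python
import numpy as np

def baoab_step(q, p, grad_U, h, gamma, kT, rng):
    """One BAOAB step for Langevin dynamics with unit mass.

    The sub-steps read the same forwards and backwards: B, A, O, A, B.
    """
    p = p - 0.5 * h * grad_U(q)                      # B: half kick
    q = q + 0.5 * h * p                              # A: half drift
    c = np.exp(-gamma * h)                           # O: exact OU update
    p = c * p + np.sqrt(kT * (1.0 - c**2)) * rng.standard_normal(np.shape(p))
    q = q + 0.5 * h * p                              # A: half drift
    p = p - 0.5 * h * grad_U(q)                      # B: half kick
    return q, p
```

Solving the pieces in a symmetric order like this is a common way to improve the accuracy of a splitting scheme without adding extra force evaluations per step in practice, since the trailing kick of one step can reuse the gradient needed by the next.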

Check out the code and try out the algorithm and examples. Also take a look at another stochastic gradient scheme I have worked on, called NOGIN.